Vibe Coding: Magic - until it isn't

2025-07-25

It's 2025 and I'm a technologist with a blog, so of course I have to write a short essay about vibe coding.

Level Setting

Popularized by Andrej Karpathy, vibe coding is an approach to software development using AI that can best be described as "outcome driven development". Instead of worrying about the how, you worry about the what and let the increasingly impressive large language models (LLMs) do the rest.

Arguably the most important element of vibe coding is the magical Always Allow option. You must accept all of your tool's output, and if something breaks, don't panic: just paste the error in and apply the fix.

For example, maybe I want to build a website that tracks the S&P 500 in the style of Windows XP. I open my tool of choice and tell it:

Create a website that tracks the S&P 500 index. It should include useful statistics, charts and price history. The website should be designed in the style of Windows XP, including a start bar and icons on the desktop.

A short while later, possibly after giving some further guidance and direction, out will pop a website that tracks the S&P 500 in the style of Windows XP. Sounds awesome, right?

The Good

Well, yes, it actually is pretty awesome. As with all things LLM, it won't be completely accurate and it might hallucinate, but all in all it is a fairly reliable experience.

Fail fast on ideas

Let's say I have an idea for a project and I want to figure out whether it is even worth thinking about further. A few years ago, I would perhaps open an IDE, start from a template project and begin putting things together. Usually, the result was a largely non-functional proof of concept (PoC) that took a couple of hours to bash together. Nowadays, I can just describe the idea to a CLI tool and have something in anywhere from 5 to 60 minutes.

This lets me churn through ideas much faster: if something just isn't working, I can dump it, and if it is, I can think about how to take it further. I think this is a good thing for creators, technical and non-technical alike, as it helps people find the good ideas a little more efficiently.

I don't have to write UI code any more

Okay, maybe that's a bit of an exaggeration. But it's not an exaggeration to say I find frontend development more frustrating than fun. It's almost certainly a skill issue, but nonetheless I am glad to let AI handle the bits of code I don't enjoy writing while I focus on the bits I do. The reverse is true for frontend devs too: don't want to spend ages figuring out how to scaffold an authentication system? Just vibe code it, dude.

This is obviously a terrible idea. I am getting ahead of myself here, but please never let AI handle something so critical. To be honest, you should probably just use an established open-source solution.

It's actually often quite fun

The novelty of describing something and having a black box spit out a working project still hasn't worn off for me. I am sometimes amazed at the absurd requests AI will prototype for me. I can also add things to projects that I would otherwise have put off because I'd think, "That sounds really painful to code, though."

The Bad

Infinite loops

Sometimes I'll hit a bug, describe it to my tool, and watch as it loops between understanding the problem, fixing it, realizing it's not fixed, understanding the problem, fixing it, and so on. It's roughly a coin flip whether closing and re-opening the tool lets it solve the problem with a fresh, unpolluted context or leaves it stuck in the same loop. Inevitably, in those cases, I have to debug it myself. So much for my zero-effort billion-dollar startup.

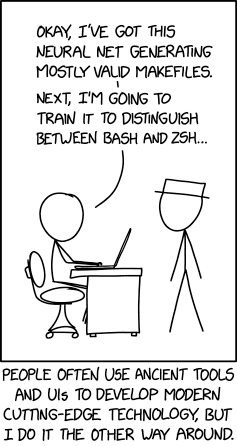

Bias

AI is only "intelligent" (debatable, if we are being pedantic) because it is trained on huge amounts of data. Naturally, if there is more of one thing in its training set than another, it will generate that thing more often. As a result, when you ask a model to do a task, it will have a "favourite" way of doing it, be it a particular framework, structure or format.

This leads to technology homogeneity or, in non-fancy terms, everything using the same thing. I believe that innovation happens at the edges, so if we converge on a common set of technologies, we stifle it.

The Ugly

It's worth saying that these are true at the time of writing. Maybe they're not true at the time of reading. Please don't send me angry emails if that is the case.

Cost

Financial

The state of affairs here is peculiar. If I buy Claude Pro, I can send 45 messages every 5 hours, or 216 per day. If I use their API, $20 gets me a little over a million tokens for both input and output. If you have paid attention to the usage stats in whatever tool you use, you'll notice that a million tokens is actually quite low: you are likely to get through that many within a month. In other words, the same $20 a month appears to buy far more usage through a Pro subscription than through the API. So how can they afford it?
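The 216-per-day figure is simply 45 messages multiplied by the 24 / 5 = 4.8 windows in a day. Extending that arithmetic to a month shows why the subscription looks underpriced. The Python sketch below computes a rough upper bound: it assumes every 5-hour window is used to the limit and that each message consumes a hypothetical 10,000 tokens (agentic tools often resend large chunks of context, but the real figure varies widely and is not a published number).

```python
# Back-of-the-envelope check of the Claude Pro numbers quoted above.
# MESSAGES_PER_WINDOW, WINDOW_HOURS and API_TOKENS_FOR_20_USD come from the post;
# TOKENS_PER_MESSAGE is a HYPOTHETICAL assumption, not a published figure.

MESSAGES_PER_WINDOW = 45           # Claude Pro quota per window (from the post)
WINDOW_HOURS = 5                   # length of one quota window (from the post)
DAYS_PER_MONTH = 30
API_TOKENS_FOR_20_USD = 1_000_000  # "a little over a million tokens" (from the post)
TOKENS_PER_MESSAGE = 10_000        # hypothetical: agentic tools resend lots of context


def messages_per_day(per_window: int, window_hours: int) -> float:
    """Upper bound on daily messages if every window is used to the limit."""
    return per_window * 24 / window_hours


daily = messages_per_day(MESSAGES_PER_WINDOW, WINDOW_HOURS)
monthly_tokens = daily * DAYS_PER_MONTH * TOKENS_PER_MESSAGE
ratio = monthly_tokens / API_TOKENS_FOR_20_USD

print(f"{daily:.0f} messages per day")
print(f"{monthly_tokens:,.0f} tokens per month (upper bound)")
print(f"{ratio:.1f}x what $20 of API credit buys")
```

Nobody uses every window around the clock, but even at a tenth of this ceiling the subscription would still cover several times the million tokens that the same $20 buys through the API.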

Well, I don't think they can. And this isn't just true of Anthropic: Google's Gemini CLI has a suspiciously generous free tier that gives you 100 free requests per day. We are in the phase of this kind of tooling where the big players are all focused on market capture: make developers feel comfortable and integrated into the ecosystem, maximise Daily Active Users (DAU) and call it a successful day.

However, even companies with as big a war chest as Google's can only play this game for so long, so I predict that within the next year or two we will see sharp increases in the cost of using these tools, unless the resources required to run these models decrease by a large factor. That, or they will charge extortionate prices to enterprise customers. After all, electricity isn't free...

Environmental

...and it certainly isn't good for the planet. The UN says AI has an environmental problem. One paper claims that AI will soon consume 6x more water than Denmark. So with that in mind, is it such a good thing that I can burn through tokens at low cost (or for free) to build a Windows XP-style S&P 500 tracker for fun instead of just Googling it?

Risk

Hallucination

LLMs predict the most likely next token given the preceding tokens. They do it so well that they can write eloquent passages, code complex systems and argue with me about whether the visual bug I am seeing is all in my head. However, that doesn't really qualify as intelligence in my book, and this is most obvious when they hallucinate.
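To make "predict the most likely next token" a little more concrete, here is a deliberately tiny Python sketch: a bigram counter that always appends whichever token most often followed the previous one. Real LLMs use neural networks over far longer contexts and usually sample rather than always taking the top token, so treat this purely as an analogy; the corpus and the function names `train_bigrams` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data": pandas appears more often than numpy.
CORPUS = [
    "import requests",
    "import numpy as np",
    "import pandas as pd",
    "import pandas as pd",
]


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each token is followed by each other token."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, max_tokens: int = 10) -> list[str]:
    """Greedily append the most frequent follower until no follower is known."""
    out = [start]
    for _ in range(max_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break
        out.append(followers.most_common(1)[0][0])
    return out


counts = train_bigrams(CORPUS)
print(" ".join(generate(counts, "import")))  # import pandas as pd
print(" ".join(generate(counts, "numpy")))   # numpy as pd
```

Asked to continue from `numpy`, the toy model confidently produces `numpy as pd`, a line that never appears in its training data: it only ever checks what is likely, never what is correct. It also always reaches for pandas after `import`, simply because pandas is the most common choice in its data.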

Maybe it will try to use a function that doesn't exist, or a library that sounds authentic but is completely invented. This is annoying at the best of times, but if people are using these models to write code in critical control flow or, even worse, in security-related parts of the codebase (authentication, authorization, etc.), it can be deviously dangerous. If you're lucky, it won't compile; if you're unlucky, you may not even know it has made a mistake until you're hacked.

Copyright

Copyright infringement by LLMs is a well-documented topic that I don't intend to rehash. Just know that the same applies to vibe coding: in the worst case, you may not even have the right to use the model's output commercially.

Cognitive decline

A recent study out of MIT found that individuals who used AI assistants for essay writing showed weaker brain connectivity than those who just used Google. Interestingly, even after switching away from AI, they showed lingering under-engagement. Meanwhile, those who had not used AI but then switched to it experienced a reduced cognitive impact.

I can believe that AI use can affect the brain. I use my programming, problem-solving and critical-thinking skills notably less when I use vibe coding tools, and this can only lead to my skill set "getting rusty". I think this will be most pronounced in junior developers who have not yet burned these habits and ways of thinking into their psyche; however, no one is immune.

Conclusion

Almost 1,500 words later, I have to say I recommend that you try vibe coding. I'd suggest Gemini CLI, since it is free and you probably won't exceed its limits in most cases. Have fun with it: get it to automate tasks you hate doing or whip up a prototype of some novel idea.

However, treat it as you would an entry-level colleague you have delegated work to. If it is touching important code, review it or do it yourself. Double-check the diffs to make sure nothing unexpected hits production. And don't expect it to perform miracles. It is, after all, just the natural evolution of predictive text.

Disclaimer

The opinions shared in this post are my own. They may be ill-informed, incorrect or just plain stupid.